Immersive Exploration of Brain Simulation Data

Our entry to the 2023 IEEE SciVis Contest

We are excited to share that this project won second prize in the 2023 IEEE SciVis Contest in Melbourne, Australia!

The Aarhus University IEEE SciVis Contest Team

Corneliu Boboc, Joachim Brendborg, Marius Hogräfer, Andreas Asferg Jacobsen, Jacob Møller Kjeldsen, Davide Mottin, Ken Pfeuffer, Asger Ullersted Rasmussen, Troels Rasmussen, Juan Manuel Rodriguez, Mathias Ryborg, Zeinab Schäfer, Hans-Jörg Schulz, Vidur Singh, Theodor Tollersrud

Submission Video

Abstract

Our submission tackles the visual analysis of the contest's brain simulation data using virtual reality (VR). To that end, we built a VR software framework for immersive exploration of the data. At its core, our solution builds on a hierarchical clustering of the brain network, which is used throughout the framework: from the organization of the database backend to the interactive adjustment of the level of detail in the frontend. The framework integrates customized methods for clustering, extraction of perceptually important points, 3D edge bundling, and tangible interaction to realize a fluid analytic workflow on the given simulation data. Two domain experts used our framework to explore the contest data, and we report on some of their observations and explanations.
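The abstract names the extraction of perceptually important points (PIPs) without detailing the team's customized variant. For orientation, here is a sketch of the classic PIP selection applied to a single time series, such as one simulated activity trajectory. The function name and the vertical-distance criterion are our assumptions; the team's customized method may well differ.

```python
from typing import List, Sequence


def perceptually_important_points(values: Sequence[float], k: int) -> List[int]:
    """Return the sorted indices of up to k perceptually important points (PIPs).

    Classic PIP selection with the vertical-distance criterion: start with the
    first and last samples, then repeatedly add the sample lying farthest
    (vertically) from the straight line joining its two neighbouring,
    already-selected PIPs.
    """
    n = len(values)
    if n == 0 or k <= 0:
        return []
    if k >= n:
        return list(range(n))
    if k == 1:
        return [0]
    selected = [0, n - 1]  # kept sorted by index
    while len(selected) < k:
        best_idx, best_dist = -1, -1.0
        # scan every gap between consecutive selected points
        for left, right in zip(selected, selected[1:]):
            y0, y1 = values[left], values[right]
            for i in range(left + 1, right):
                # height of the chord between the two neighbouring PIPs at position i
                chord = y0 + (y1 - y0) * (i - left) / (right - left)
                dist = abs(values[i] - chord)
                if dist > best_dist:
                    best_idx, best_dist = i, dist
        if best_idx < 0:  # no interior samples left to add
            break
        selected.append(best_idx)
        selected.sort()
    return selected
```

For example, on the series `[0, 1, 5, 1, 0, 0, 3, 0]` with `k = 3`, this keeps indices `[0, 2, 7]`: the two endpoints and the spike. Because the first and last samples are always kept, a simplified trajectory still spans the full time range of the original.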

Team Introduction Video

Selected Features

Tactile Interaction

To make it easier to share insights with domain experts outside of VR, we created a physical 3D model of the brain that serves as a tangible and trackable input device for our framework. Not only is the 3D view synced with the orientation of the physical model, but the model can also be tapped with a stylus-like pointer, whereupon the cluster corresponding to the tapped region unfolds into its subclusters in the 3D view.
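The unfolding behaviour can be illustrated with a small level-of-detail model. This is a minimal sketch, not the framework's code: it assumes the cluster hierarchy is available as a tree with precomputed centroids expressed in the physical model's coordinate frame, and it assumes a tap selects the visible cluster whose centroid lies nearest to the tracked stylus tip. `ClusterNode`, `LevelOfDetailView`, `unfold`, and `on_tap` are hypothetical names, and the nearest-centroid hit test is our stand-in for whatever region mapping the framework actually uses.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ClusterNode:
    """One cluster in a hierarchical clustering of the neuron network (hypothetical)."""
    cluster_id: str
    centroid: Vec3 = (0.0, 0.0, 0.0)  # assumed: position in the physical model's frame
    children: List["ClusterNode"] = field(default_factory=list)


class LevelOfDetailView:
    """Tracks the clusters currently drawn, i.e. a cut through the hierarchy."""

    def __init__(self, root: ClusterNode) -> None:
        self._by_id: Dict[str, ClusterNode] = {}
        self._index(root)
        # start one level below the root (or with the root itself if it is a leaf)
        self.visible: List[str] = [c.cluster_id for c in root.children] or [root.cluster_id]

    def _index(self, node: ClusterNode) -> None:
        # register every node by id so taps and unfolds can look clusters up quickly
        self._by_id[node.cluster_id] = node
        for child in node.children:
            self._index(child)

    def unfold(self, cluster_id: str) -> bool:
        """Replace a visible cluster by its subclusters; return False if that is impossible."""
        node = self._by_id.get(cluster_id)
        if node is None or not node.children or cluster_id not in self.visible:
            return False
        pos = self.visible.index(cluster_id)
        self.visible[pos:pos + 1] = [c.cluster_id for c in node.children]
        return True

    def on_tap(self, tip: Vec3) -> Optional[str]:
        """Unfold the visible cluster nearest to the stylus tip; return its id if unfolded."""
        nearest = min(self.visible, key=lambda cid: math.dist(tip, self._by_id[cid].centroid))
        return nearest if self.unfold(nearest) else None
```

Keeping the visible set as a cut through the hierarchy means each tap replaces exactly one entry by its children, so the 3D view always shows a complete, non-overlapping partition of the neurons, only at varying levels of detail.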

Space-filling Curves

To place multiple simulation trajectories in a line chart, we needed to order the trajectories of clusters/neurons so that their spatial proximity in the 3D neuronal network view is preserved as well as possible. We achieved this by constructing a space-filling curve across all neurons and clusters. A 3D space-filling curve, however, does not work well in this case: all neurons lie on the surface of the brain, leaving it hollow, which leads to rather unreasonable curves and linear orders. Exploiting the similarity between neurons on the brain's surface and points on a globe, we first applied a map projection to the 3D data and then laid a 2D space-filling curve over the resulting "map of the brain". After testing various projections and space-filling curves, we chose the combination of the Van der Grinten projection and a Hilbert curve, which gave us the best overall fit.
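The projection-then-curve ordering can be sketched as follows. This is a minimal illustration under stated assumptions, not the team's implementation: each 3D position is converted to longitude/latitude around the point cloud's centroid (treating z as the brain's "up" axis, which is an assumption), projected with the standard forward Van der Grinten formulas from Snyder's *Map Projections: A Working Manual*, snapped onto a 2**order by 2**order grid, and sorted by Hilbert index. The function names and the `order` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def van_der_grinten(lon: float, lat: float) -> Tuple[float, float]:
    """Forward Van der Grinten projection on a unit sphere (angles in radians).

    Follows the formulas in Snyder (1987); results lie in a disc of radius pi.
    """
    if abs(lat) < 1e-12:  # equator: x = lon, y = 0
        return lon, 0.0
    theta = math.asin(min(1.0, abs(2.0 * lat / math.pi)))
    if abs(lon) < 1e-12 or abs(abs(lat) - math.pi / 2) < 1e-12:
        # central meridian or pole: x = 0, y = +/- pi * tan(theta / 2)
        return 0.0, math.copysign(math.pi * math.tan(theta / 2.0), lat)
    a = 0.5 * abs(math.pi / lon - lon / math.pi)
    g = math.cos(theta) / (math.sin(theta) + math.cos(theta) - 1.0)
    p = g * (2.0 / math.sin(theta) - 1.0)
    q = a * a + g
    p2a2 = p * p + a * a
    gp = g - p * p
    x = a * gp + math.sqrt(max(0.0, a * a * gp * gp - p2a2 * (g * g - p * p)))
    y = p * q - a * math.sqrt(max(0.0, (a * a + 1.0) * p2a2 - q * q))
    # the sign of x follows the longitude, the sign of y follows the latitude
    return (math.copysign(math.pi * x / p2a2, lon),
            math.copysign(math.pi * y / p2a2, lat))


def hilbert_index(n: int, x: int, y: int) -> int:
    """Map cell (x, y) of an n-by-n grid (n a power of two) to its Hilbert-curve index."""
    d = 0
    s = n // 2
    while s > 0:
        rx = 1 if x & s else 0
        ry = 1 if y & s else 0
        d += s * s * ((3 * rx) ^ ry)
        if ry == 0:  # rotate/flip the quadrant so the sub-curve has the right orientation
            if rx == 1:
                x, y = n - 1 - x, n - 1 - y
            x, y = y, x
        s //= 2
    return d


def surface_order(points: Sequence[Point3], order: int = 8) -> List[int]:
    """Return point indices sorted along a Hilbert curve over a Van der Grinten map."""
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    cz = sum(p[2] for p in points) / len(points)
    n = 1 << order
    keys = []
    for i, (px, py, pz) in enumerate(points):
        dx, dy, dz = px - cx, py - cy, pz - cz
        r = math.sqrt(dx * dx + dy * dy + dz * dz) or 1.0
        lon = math.atan2(dy, dx)  # assumption: z is the brain's "up" axis
        lat = math.asin(max(-1.0, min(1.0, dz / r)))
        mx, my = van_der_grinten(lon, lat)
        # map the [-pi, pi] map extent onto integer grid cells 0..n-1
        gx = max(0, min(n - 1, int((mx + math.pi) / (2 * math.pi) * n)))
        gy = max(0, min(n - 1, int((my + math.pi) / (2 * math.pi) * n)))
        keys.append((hilbert_index(n, gx, gy), i))
    return [i for _, i in sorted(keys)]
```

Sorting by Hilbert index keeps points that share a grid cell, or sit in neighbouring cells, close together in the resulting linear order. A larger `order` gives a finer grid and therefore fewer ties between nearby neurons.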

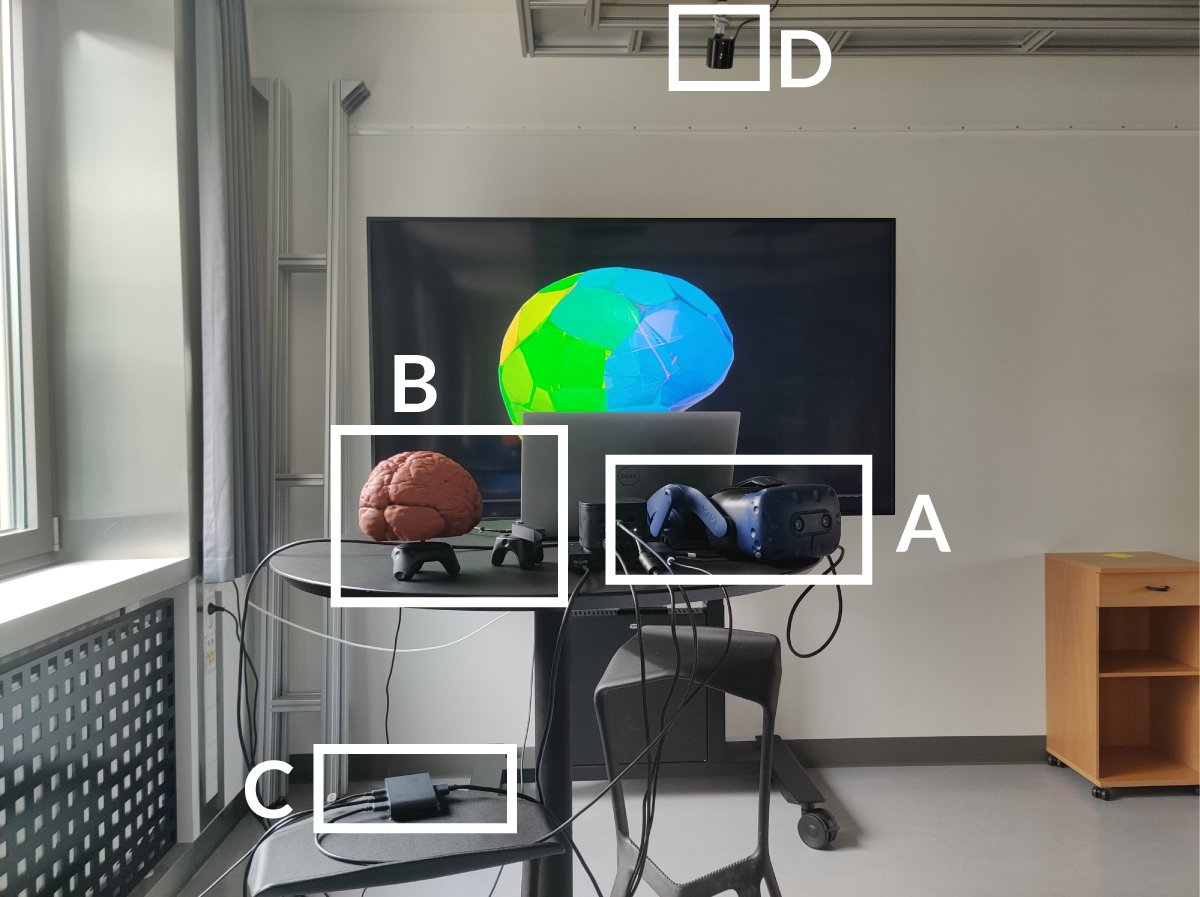

Setup

The platform for our submission is an HTC Vive Pro 2 head-mounted display (HMD), controlled with two HTC Vive controllers. The physical brain model for the tangible interaction was created by slicing the 3D brain scan in UltiMaker Cura and 3D-printing it on a Prusa i3 MK3 printer. The stylus was modeled by hand in Autodesk Fusion 360 and likewise 3D-printed. Both were then fitted with HTC Vive Trackers. Four HTC SteamVR Base Stations 2.0 provide the HMD, the controllers, and the trackers with data on their position and orientation (using this Vive tracker profile); this data is then passed to Unity through an HTC Vive Link Box and SteamVR.